Everlyn AI (LYN) bills itself as the world’s first open-source foundational video model and super-agential multimodal ecosystem. The platform was designed to deliver an open "dream machine" to the world, and it aims to push the boundaries of video artificial intelligence. Its flagship open-source model, Everlyn-1, targets gains in output duration, interactivity, generation latency, and hyper-realistic visual quality. Everworld is Lyn’s video creation engine and platform. It lets people engage with photorealistic virtual representations of themselves through personalized, interactive video agents designed to handle a broad range of tasks. Together, Everlyn-1 and Everworld are intended to change what individuals can achieve online. They do this by taking over tasks that people either prefer not to do or are unable to do.

What is Everlyn AI (LYN)?

Lyn was founded by a team of leading generative AI experts. The team includes professors from Cornell University, Oxford University, Stanford University, University of Central Florida (UCF), Hong Kong University of Science and Technology (HKUST), Mohamed bin Zayed University of Artificial Intelligence (MBZUAI), and Peking University (PKU). It also includes former leaders from Meta, DeepMind, Microsoft, Google, and Tencent, and current PhD students in generative AI.

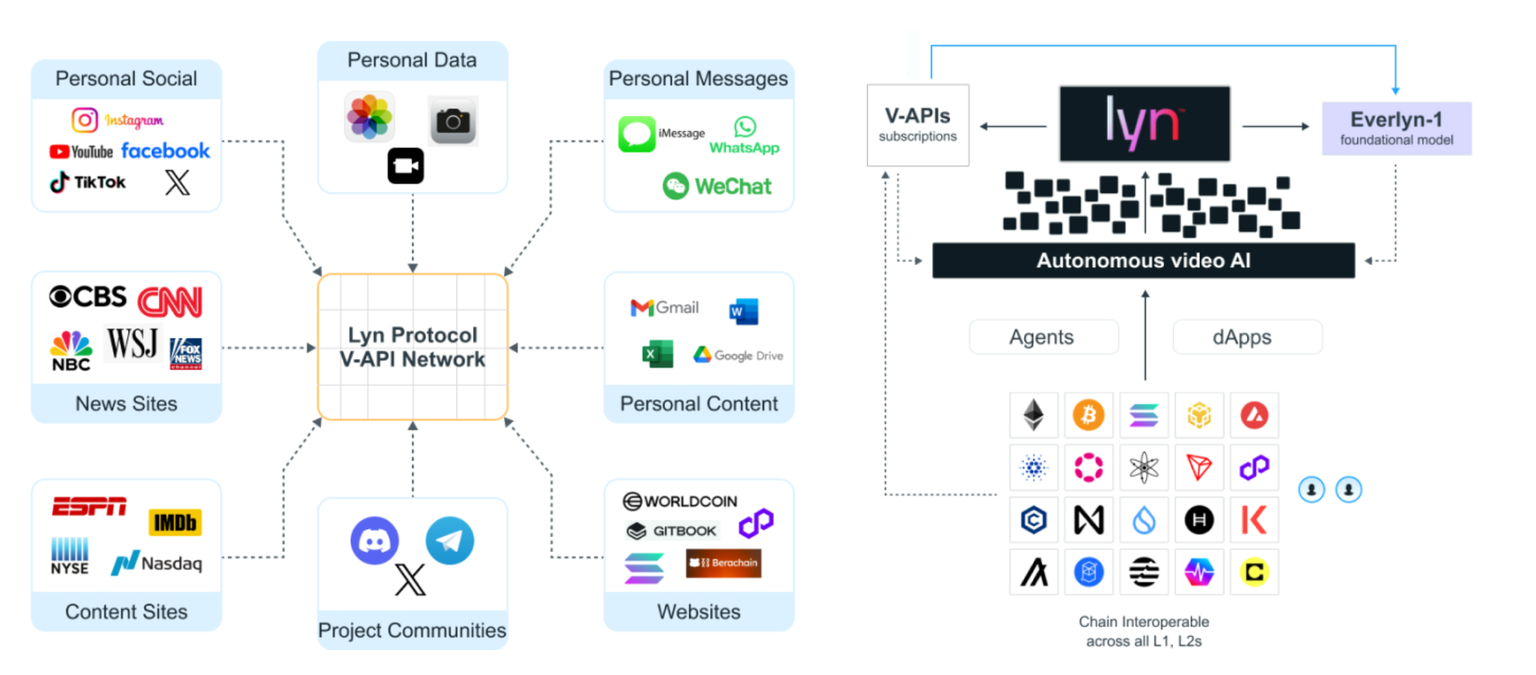

Lyn introduces human-centric video agents, called Lyns, with photorealistic, multimodal agential capabilities. These agents operate on a decentralized protocol powered by a large network of Agent APIs (AAPIs). The project expects Lyns to take over the majority of human activity online in the coming years. Everlyn-1 builds on research in autoregressive modeling, tokenization, MLLM hallucination reduction, and data pipelining. The platform also rests on several other technologies: hyper-contextual data modules, personalized data feed partitioning (DataLink), hybrid in-cloud and on-device model deployment, and Agent API protocol routing (CommandFlow).

Purpose of Everlyn AI

Lyn believes that virtual AI (AI-powered virtual reality) will drive most technological and scientific breakthroughs, serving as a testbed for the physical world and ultimately advancing humanity and civilization. An open-source and decentralized virtual AI infrastructure and engine will make everyone an equal contributor and beneficiary in this exciting future.

Lyn’s vision is a future where physical reality becomes indistinguishable from the virtual. In this future, an ultra-low-latency generative AI engine can conjure the impossible for anyone, anywhere. This makes everyone on Earth the creator, director, and owner of their own video or narrative that represents their life. Virtual experiences help accelerate the advancement of the physical world, enabling human and societal evolution to unfold instantly.

Lyn aims to be the first ecosystem to empower billions of people as equal participants in this future, and video generative AI is the first step toward it. Everything we see and experience today is an abstraction of video: a sequence of unfolding moments that make up what we call life. Humanity and civilization develop from lived experiences. However, our lives are constrained by the laws of physics, the limits of the physical world, and the boundaries of human existence. These constraints significantly hinder the progress of humanity and civilization.

How Does Everlyn AI Work?

At the core of Lyn are the Everlyn-1 model and the Everworld platform. Everlyn-1 produces high-quality, long-duration, and hyper-realistic videos using autoregressive modeling and tokenization techniques. Everworld is a decentralized platform where video agents are created and operated using LYN tokens. These agents perform tasks on behalf of users, interact with other agents, and acquire new capabilities from the AAPIs store.
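The source does not describe Everlyn-1's internals, but the general idea of autoregressive generation can be sketched. The minimal Python example below assumes that the video has already been tokenized into discrete ids. Each new token is predicted from the tokens before it. Only a sliding window of recent tokens is fed back, so long outputs keep a bounded per-step cost. The names `generate_autoregressive` and `toy_predictor`, and the `context_window` parameter, are hypothetical. The toy rule stands in for a trained model.

```python
from typing import Callable, List

# Hypothetical stand-in for a trained next-token predictor: it maps the
# visible context (a list of discrete video-token ids) to the next token id.
NextTokenFn = Callable[[List[int]], int]


def generate_autoregressive(
    predict_next: NextTokenFn,
    prompt: List[int],
    num_new_tokens: int,
    context_window: int,
) -> List[int]:
    """Generate tokens one at a time, each conditioned on earlier tokens.

    Only the most recent `context_window` tokens are passed to the model,
    one common way to keep the cost per step bounded for long outputs.
    """
    if context_window < 1:
        raise ValueError("context_window must be at least 1")
    tokens = list(prompt)
    for _ in range(num_new_tokens):
        context = tokens[-context_window:]  # sliding window of recent tokens
        tokens.append(predict_next(context))
    return tokens


def toy_predictor(context: List[int]) -> int:
    # Illustrative rule only: next id = (sum of the last two ids) mod 8.
    return sum(context[-2:]) % 8
```

With this toy rule, `generate_autoregressive(toy_predictor, [1, 2], 5, context_window=4)` extends the prompt to `[1, 2, 3, 5, 0, 5, 5]`. A real model would replace `toy_predictor` with a learned network over the tokenizer's vocabulary.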

Everlyn-1 Model:

- Long-duration, temporally consistent video generation with autoregressive modeling.

- Data compression with Vector Quantization (VQ), achieving a 256x compression ratio.

- An advanced data pipeline and high-quality captions to reduce hallucination.

- Bridge-TTS and diffusion-based models for audio-driven video generation.
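As generally practiced, vector quantization maps each continuous feature vector to the index of its nearest entry in a learned codebook. A video can then be stored as a grid of small integers. The sketch below shows that lookup with a made-up two-dimensional codebook. `quantize`, `dequantize`, and `positions_per_token` are hypothetical helpers. The source does not say how Everlyn-1 arrives at its 256x figure. If the ratio counted positions, then one token per 16×16 pixel patch, or one token per 4 frames × 8×8 pixels, would each give 256; this is an assumption for illustration only.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def quantize(vectors: List[Vector], codebook: List[Vector]) -> List[int]:
    """Map each vector to the index of its nearest codebook entry (Euclidean)."""
    return [
        min(range(len(codebook)), key=lambda k: math.dist(v, codebook[k]))
        for v in vectors
    ]


def dequantize(indices: List[int], codebook: List[Vector]) -> List[Vector]:
    """Reconstruct approximate vectors by looking the indices back up."""
    return [codebook[i] for i in indices]


def positions_per_token(frames: int, height: int, width: int) -> int:
    """Hypothetical: how many (frame, row, column) positions one token covers."""
    return frames * height * width


codebook = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0)]
indices = quantize([(0.1, 0.2), (4.0, 4.5), (0.9, 1.2)], codebook)
```

Here `indices` is `[0, 2, 1]`, and `dequantize(indices, codebook)` returns the nearest codebook vectors. The small approximation error this introduces is the price of the compression.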

Everworld Platform:

- Decentralized protocol powered by LYN tokens.

- Agents perform tasks on behalf of users (e.g., reservations, shopping, communication).

- DataLink provides hyper-contextual data management; CommandFlow directs task execution.

- The AAPIs store allows developers to add new features.
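The source names CommandFlow as the component that routes tasks to Agent APIs, but it does not document CommandFlow's interface. The sketch below illustrates the general routing idea. It assumes that each installed AAPI can be modeled as a function keyed by a capability name. `CommandRouter`, `install`, and `route` are hypothetical names, not Everworld's API.

```python
from typing import Callable, Dict

# Hypothetical: an AAPI is modeled as a function from a task payload to a result.
Handler = Callable[[dict], str]


class CommandRouter:
    """Illustrative CommandFlow-style router: capability name -> AAPI handler."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def install(self, capability: str, handler: Handler) -> None:
        # Models adding a new capability to an agent from the AAPIs store.
        if capability in self._handlers:
            raise ValueError(f"capability already installed: {capability}")
        self._handlers[capability] = handler

    def route(self, capability: str, payload: dict) -> str:
        # Dispatch the task to the matching AAPI, or fail loudly if none exists.
        handler = self._handlers.get(capability)
        if handler is None:
            raise KeyError(f"no AAPI installed for: {capability}")
        return handler(payload)


router = CommandRouter()
router.install("reservation", lambda p: f"booked {p['venue']} for {p['party']}")
```

With this setup, `router.route("reservation", {"venue": "Cafe", "party": 2})` returns `"booked Cafe for 2"`. A request for a capability that has not been installed raises `KeyError`.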

Video Agents:

- Personalized photorealistic representations using user-specific data (voice, image).

- Real-time interaction, facial expressions, and lip synchronization.

- Financial transactions (buying, selling, payments) with decentralized wallets.

- Encrypted data lockers and on-chain storage for user data privacy.

Everlyn AI Use Cases

Lyn offers a wide range of applications through video agents:

- Daily Tasks: Reservations, shopping, calendar management, communication.

- Entertainment: Personalized movie recommendations, real-time previews.

- Education and Healthcare: Interactive education, health monitoring, medication reminders.

- Marketing and Communication: Social media management, customer service, virtual meetings.

- Personal Assistant: Continuous companionship, emotional support, hyper-local recommendations.

Usage Steps:

- Create a video agent on Everworld using LYN tokens.

- Personalize the agent with voice, image, or video.

- Add new capabilities from the AAPIs store.

- Allow the agent to autonomously perform tasks (e.g., shopping, reservations).

- Stake LYN tokens to unlock additional features or revenue sharing.

Advantages of Everlyn AI

- Open Source: Everlyn-1 is a foundational video model accessible to everyone.

- Decentralization: Full control over user data, privacy, and security.

- Personalization: User-specific photorealistic agents with real-time interaction.

- Scalability: Superpipeline-quantization and xDiT for 10x lower costs.

- Community-Driven: AAPIs store and LYN staking incentivize developers and users.

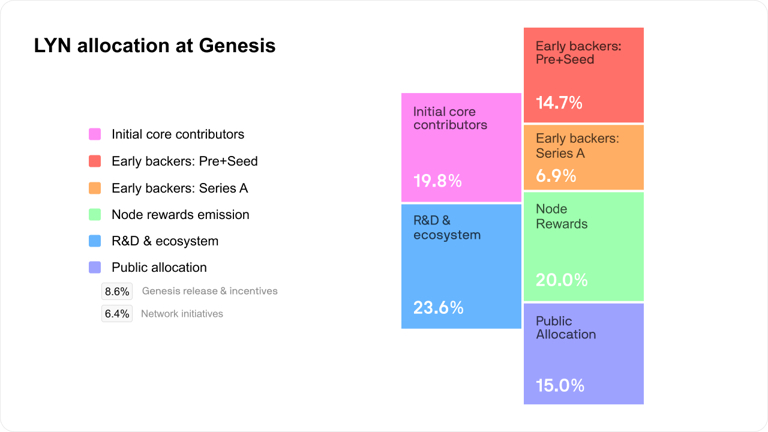

Everlyn AI (LYN) Tokenomics

Total Supply: 1 billion $LYN (at Genesis).

Distribution:

- Initial Core Contributors: 19.80%

- Pre-Seed Early Backers: 14.70%

- Early Backers: 6.90%

- R&D Ecosystem: 23.60%

- Node Rewards Emission: 20%

- General Allocation: 15%
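As a quick check on the figures above, the snippet below confirms that the percentages sum to 100%. It then applies them to the 1 billion genesis supply to get the token amount for each bucket. This is plain arithmetic on the published numbers; the variable and function names are illustrative.

```python
from decimal import Decimal
from typing import Dict

TOTAL_SUPPLY = 1_000_000_000  # 1 billion LYN at genesis

# Percentages taken from the distribution list above.
ALLOCATION_PCT: Dict[str, Decimal] = {
    "Initial Core Contributors": Decimal("19.80"),
    "Pre-Seed Early Backers": Decimal("14.70"),
    "Early Backers": Decimal("6.90"),
    "R&D Ecosystem": Decimal("23.60"),
    "Node Rewards Emission": Decimal("20"),
    "General Allocation": Decimal("15"),
}


def allocation_amounts(total: int, pct: Dict[str, Decimal]) -> Dict[str, int]:
    """Convert percentage allocations into whole-token amounts."""
    # Decimal avoids binary floating-point rounding on values like 19.80.
    return {name: int(total * p / 100) for name, p in pct.items()}


assert sum(ALLOCATION_PCT.values()) == 100  # the buckets cover the full supply
amounts = allocation_amounts(TOTAL_SUPPLY, ALLOCATION_PCT)
```

The resulting amounts are 198,000,000 LYN for Initial Core Contributors and 147,000,000 for Pre-Seed Early Backers. Early Backers receive 69,000,000, R&D Ecosystem 236,000,000, Node Rewards Emission 200,000,000, and General Allocation 150,000,000.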

Lock/Unlock:

- Gradual unlocking over three years with different schedules.

- Locked tokens can participate in staking, but rewards are only received upon unlocking.
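The source gives only the three-year horizon. Purely for illustration, the sketch below assumes a linear monthly schedule over 36 months. It reads "rewards are only received upon unlocking" to mean that rewards earned on locked tokens are held back and released in step with the unlock. The actual schedules differ by allocation and are not specified here. `unlocked_fraction` and `claimable_rewards` are hypothetical helpers.

```python
from fractions import Fraction


def unlocked_fraction(month: int, total_months: int = 36) -> Fraction:
    """Share of a locked allocation released after `month` months.

    Hypothetical linear schedule: an equal share unlocks each month.
    """
    if month <= 0:
        return Fraction(0)
    return min(Fraction(month, total_months), Fraction(1))


def claimable_rewards(accrued: int, month: int, total_months: int = 36) -> Fraction:
    """Staking rewards that can actually be received by `month`.

    Assumption: rewards earned on locked tokens are escrowed and released in
    the same proportion as the underlying tokens unlock.
    """
    return accrued * unlocked_fraction(month, total_months)
```

Under these assumptions, 12 of 36 months unlocks a third of the tokens. At that point `claimable_rewards(360, 12)` returns `Fraction(120, 1)`, and all 360 are claimable from month 36 onward.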

Inflation:

- 10% in the first year, decreasing by 10% annually until reaching a perpetual 1% rate.
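The phrase "decreasing by 10% annually" most naturally reads as a relative reduction: 10%, then 9%, then 8.1%, and so on. A cut of 10 percentage points would reach zero after the first year. The source does not state which reading is meant. Under the relative reading, the schedule can be computed as follows; `inflation_schedule` and its parameters are illustrative.

```python
from typing import List


def inflation_schedule(
    years: int, start: float = 0.10, decay: float = 0.10, floor: float = 0.01
) -> List[float]:
    """Annual inflation rates, assuming a relative (multiplicative) decline.

    Year 1 uses `start`. Each following year is (1 - decay) times the previous
    year's unclamped rate, and the reported rate never drops below `floor`.
    """
    rates: List[float] = []
    rate = start
    for _ in range(years):
        rates.append(max(rate, floor))
        rate *= 1 - decay
    return rates


rates = inflation_schedule(30)
first_floor_year = rates.index(0.01) + 1
```

Under this reading, the rate is about 1.09% in year 22 and would first hit the 1% floor in year 23, since 10% × 0.9²² ≈ 0.98%, which falls below the floor.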

LYN Usage:

- Video agent creation and subscription fees.

- Gas fees for on-chain transactions.

- Financial transactions in agent wallets.

- Feature access through AAPIs purchase and staking.

- Staking for revenue sharing (agents or AAPIs).
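The source does not specify how staking revenue is split. This sketch assumes pro-rata distribution, a common approach in which each staker receives revenue in proportion to their share of the total stake. It works in integer base units and returns any rounding remainder separately, so no tokens are created or lost. `share_revenue` is a hypothetical helper.

```python
from typing import Dict, Tuple


def share_revenue(revenue: int, stakes: Dict[str, int]) -> Tuple[Dict[str, int], int]:
    """Split `revenue` pro rata by stake; return (payouts, undistributed remainder).

    Amounts are integers in the token's smallest unit, so each payout is rounded
    down and the leftover dust is reported rather than silently dropped.
    """
    total = sum(stakes.values())
    if total == 0:
        # Nobody is staked: nothing can be distributed.
        return {staker: 0 for staker in stakes}, revenue
    payouts = {staker: revenue * amount // total for staker, amount in stakes.items()}
    remainder = revenue - sum(payouts.values())
    return payouts, remainder
```

For example, `share_revenue(1000, {"a": 50, "b": 30, "c": 20})` pays out 500, 300, and 200 with no remainder. Splitting 100 across three equal stakes pays 33 each and leaves a remainder of 1.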

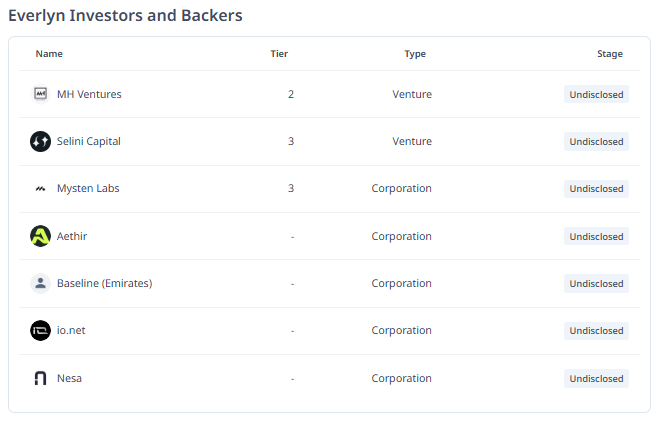

Everlyn AI Investors

Lyn raised $15 million on August 31, 2025. Investors:

- Tier 2: MH Ventures (Venture).

- Tier 3: Selini Capital (Venture), Mysten Labs (Corporate).

- Others: Aethir, Baseline (Emirates), io.net, Nesa (Corporate).

Everlyn AI Team

The Lyn team consists of leading AI researchers who have played pivotal roles in major industry innovations. These include Google’s foundational video model VideoPoet, Meta’s foundational video model Make-A-Video, and Tencent’s foundational video model. They also include Seeing and Hearing, the most-cited benchmark model in Meta Movie Gen, and Open-Sora-Plan, the industry’s leading open-source video model. Finally, they include Body of Her, OpenAI’s first real-time end-to-end humanoid agent video model.

The Lyn team is led by Patrick Colangelo (Co-Founder) and Serge Belongie (Leader).
